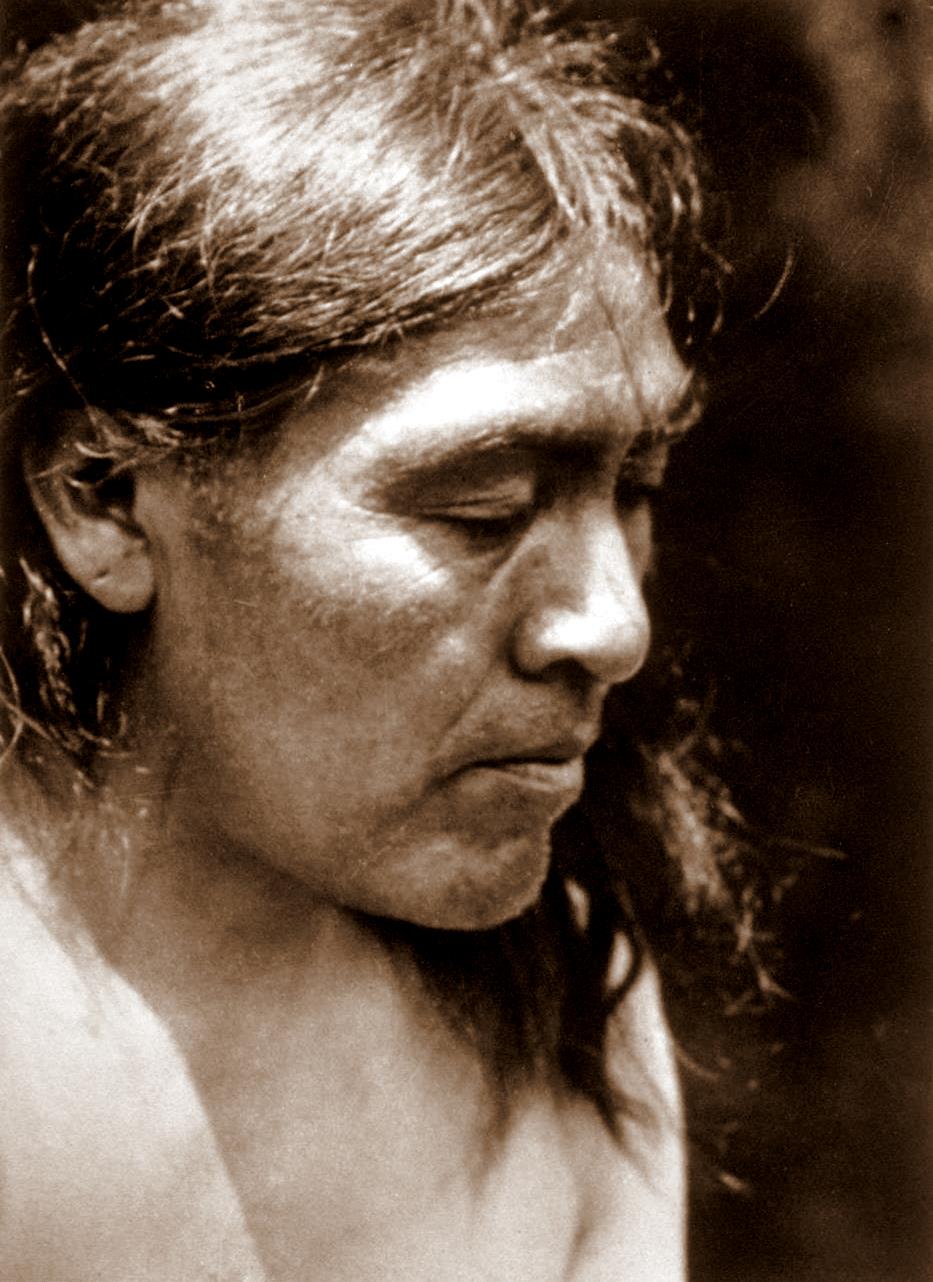

“My father is Awa. He is a great warrior, he defended the Waorani territory with spears. Now I must defend our territory and the forest with documents and law, speaking Spanish, and traveling far away like the harpy eagle”.

—Baihua, Waorani man of Ecuador (Korn 2018)

In 2018, I was in the Ecuadorian Amazon near the Napo River, traveling as a tourist. We were in a canoe headed towards an indigenous Kichwa community situated along the river. The last tour group to visit the community was offered live grubs. As an anthropologist that covers insect eating in my class, I knew I’d have to eat one of those live grubs as a point of honor. Palm larvae are large, plump white grubs with a dark head, and are eaten by many Amazonian peoples. On the long canoe trip to the village, I was going back and forth between visualizing eating a live palm larva and shutting it out of my mind completely. Finally, the moment came. We were seated in a communal hut, and the smiling Kichwa women passed around a basket of wiggling white grubs. But to my relief, they decided to cook them over a fire on sticks! They only vaguely resembled grubs once they were grilled and that made all the difference. It was no problem to scarf down a palm larva or two, and as it turned out, they tasted vaguely like bacon.

Every organism needs to get energy from its environment, energy that ultimately comes from the sun. Those ancient people who built monuments to the sun, at Stonehenge and Machu Picchu, were on to something. Gorillas get their energy almost entirely from plant leaves, with an occasional insect here and there. Humans, being omnivores, can and do eat just about anything. Deciding what to eat has been called the “omnivore’s dilemma”, a term popularized by writer Michael Pollan. Today, about half of the world’s population lives in urban areas and buys food rather than producing it. Pollan illuminates just how W.E.I.R.D. our relationship with food is by pointing out that we need investigative journalists to track down where it comes from and what’s in it. Pollan calls these “industrial foods.” Today, during the age of coronavirus, more people are turning to urban foraging, eating things like pigeons, wild fruits, and even the mustard weeds that can be seen all over Albuquerque. One concern is that these foods might be contaminated with pesticides or other chemicals. For some, urban foraging is more than a food source. It’s a way to spiritually connect with nature. Gabrielle Ceberville posts regular foraging videos on TikTok and has a following of more than 800,000. Ceberville (2021) explains, “Foraging is a way to make the natural world feel real and valuable to people. When you’re foraging for food or even just for fun, you’re recognizing that there is an entire ecosystem growing around you, and you start to take part in a carbon exchange with the natural world. I think it’s important to treat nature like a neighbor. Foraging is an important first step.” Another foraging sensation is Alexis Nikole Nelson, also known as the Black Forager, a Columbus-based social media influencer, who has over 800,000 followers on Instagram and 1.7 million followers on TikTok.

For most of human history, humans have been foragers. For millennia, societies foraged for food or produced their food and continue to do so. Anthropologists use the term subsistence to refer to how we go about getting food. Societies that produce or forage for their food are called subsistence economies.

Anthropologists use a classification system of different modes of subsistence. These include:

- Foraging (also known as hunting and gathering): reliance on wild resources

- Pastoralism: reliance on herding animals

- Horticulture: gardening using mostly human power and simple tools

- Intensive Agriculture: farming with the use of animal labor, plow, fertilizers, irrigation, or terracing

- Industrialized Food Production and Wage Labor: work for wages to buy food from others

These subsistence modes can overlap. For example, people can practice horticulture much of the year but supplement with hunted and gathered foods at other times. Or foragers can exchange foods with neighboring agriculturalists. Or people who work for wages can have a garden. While these categories are not mutually exclusive, they are useful to anthropologists because subsistence predicts other aspects of culture, including things like mobility, population size, how labor is organized, and social and political systems. Also of interest to anthropologists are how subsistence is changing and the consequences of those changes.

Foraging

Foraging, or hunting and gathering, simply means reliance on wild food resources. Foragers rely on human power to get energy from their environments, and foraging is the oldest form of human subsistence. Foraging has been around for hundreds of thousands of years, whereas other modes of subsistence like pastoralism, horticulture, and intensive agriculture have only been around a few thousand years. Reliance on fossil fuels (coal, oil, and natural gas) as a source of energy is only a few hundred years old. Psychologists, biologists, and anthropologists believe this deep history of hunting and gathering has shaped our species both physically and psychologically. Historian Yuval Harari argues that we are foragers in body and mind, but industrialists in practice, a mismatch he blames for many of our modern problems. For that reason, the study of foragers has always been a cornerstone of anthropology. Of course, foragers are not living fossils stuck in the stone age, but modern humans whose lives are as complex and dynamic as our own.

Foraging has continued into the 20th century, especially in those areas ill-suited or difficult to exploit for farming. Most foragers live in Arctic regions, deserts, and tropical rainforests. Today, however, very few people subsist by foraging alone. In The Foraging Spectrum, archaeologist Robert Kelly points out that nearly all forager societies today rely at least in part on non-forager economies, such as those of agriculturalists, pastoralists, and industrialized nations. In short, foraging is a way of life that is dying. Traditional foragers or forager/gardeners of today and the recent past include the “forest people” (formerly called pygmies) of Uganda and the Congo, the Inuit of the Arctic (the Eskimo), the Hadza of Tanzania, the Huaorani of Ecuador, the Batek of Malaysia, and the Aboriginal peoples of Australia, to name a few.

A few groups of people remain virtually uncontacted by modern societies and resist encroachment by outsiders. To the south of the Amazon, the Ayoreo people of Paraguay avoid contact with outsiders but are pushed out by encroaching settlers. In remote areas of the Amazon Basin, foragers are being pushed out of their traditional lands by illegal logging, mining, agribusiness, and other industrialized operations. Some Waorani foragers of Ecuador have moved deeper into the Amazon and avoid contact with outsiders. Some fight oil companies with lawyers and legal documents. But with a shrinking land base, the Waorani find it more difficult to avoid each other, and violent clashes are thought by some Waorani to be inevitable. Though the rights of people to practice traditional ways are protected in Ecuador’s Constitution, other clauses stipulate that oil and mining operations cannot be obstructed. In addition, the fierce egalitarianism among the Waorani makes it difficult for them to name people as their representatives under a unified tribal entity (Korn 2018).

The Sentinelese, who live on North Sentinel Island in the Andaman Islands, located in the Bay of Bengal, are some of the most isolated people in the world. The island is officially administered by India. Because of previous deadly encounters and because the Sentinelese could be devastated by mainland diseases, all attempts at contact are now illegal. Recently, an American man who intended to bring Christianity to the islanders was killed with bows and arrows (Schultz et al. 2018). Following the devastating tsunami of 2004 in the region, there was concern that the Sentinelese had been wiped out. When a reconnaissance helicopter expedition flew over the island, a Sentinelese man emerged from the woods and aimed a bow and arrow at the helicopter. Though the Sentinelese had managed to survive the tsunami, their numbers remain uncertain.

Foragers often have certain features in common. For example, except those who live along the coast, foragers tend to be highly mobile; that is, they tend to move often to where they can find food. This is called an extensive use of land. That is, foragers tend to need a large area in which to forage. Because a lot of land is needed, mobile forager band size is rather small. Depending on the environment and season, mobile foragers might stay just a few weeks at a camp and houses tend to be temporary structures.

Mobile foraging societies also tend to be more egalitarian than societies associated with other modes of subsistence. There are not different classes or full-time political leaders. The term egalitarian means that a society has relatively few differences in status, wealth, and power between people. Lying in stark contrast to foragers are industrialized societies like the United States. The top one percent of people in the U.S., for example, control forty percent of the wealth. In The Last of the Cuiva, colonial settlers view the Cuiva (now called the Hiwi) foragers as wild animals because they wear no clothes and hunt and gather food. The Hiwi, whose society is based on sharing, view the settlers as uncivilized because they refuse to share their food! Because there are no clear chiefs in forager societies, it can be difficult for them to deal with governments and companies that are interested in buying or using their resources. And there is no centralized control over other members of the group. Typically, when a person does something that counters the values of a forager society, they are ostracized, a devastating punishment for a forager (Everett 2009). Even in industrialized societies not based on sharing, being cancelled or socially excluded can have devastating effects on a person.

Sometimes people in industrialized nations feel sorry for foragers, not so much because of their dwindling land problem, but because they’re not consumers: they don’t have much in the way of clothes, cars, phones, money, and so forth. And yet all their work goes directly to meet their needs or is shared with friends and family. In wage economies, as Vicki Robin and Joe Dominguez write in Your Money or Your Life, you trade your life energy for money. Happiness and satisfaction tend to be very high among foragers (if they have their original lands), while anxiety, drug addiction, and depression are common problems in societies where people trade their lives for money. When foragers become part of wage economies, often against their will, the resulting depression and alcohol addiction are disastrous. The Baka of Cameroon, displaced from their forest, are regularly paid in banana alcohol, and fetal alcohol syndrome is widespread. Alcohol poisoning is now a leading cause of death among the Baka (Gibbons 2018). Indeed, foragers worldwide are often paid by outsiders in alcohol. In his 2009 book Don’t Sleep, There Are Snakes, Daniel Everett tells of a Brazilian trader who wanted the Pirahã foragers to work collecting Brazil nuts. Instead of paying them in goods, his plan was to pay them in cachaça, alcohol distilled from sugar cane juice, a practice that is illegal in Brazil.

Even though mobile foragers tend to be egalitarian, this does not mean that they are somehow naturally or biologically more generous than other groups of people. The English poet John Dryden (1631–1700) thought of hunter-gatherers as “noble savages,” a term he coined. A century later, Jean-Jacques Rousseau (1712–1778) saw hunter-gatherers as being in harmony with nature, unsullied by civilization. The noble savage idea is still with us today, but it fails to acknowledge the humanity and complexity of foraging people. A closer look at forager societies shows that they are not “naturally” egalitarian nor are they always generous. Foraging societies are based on egalitarianism and reciprocity, and have been known to work hard to ensure that no person gets too much power. Anthropologist Richard Lee learned that among the !Kung of the Kalahari (now called the Ju/’hoansi), there is a practice called “insulting the meat.” As a Ju/’hoansi man explained in the ethnography The Dobe !Kung:

When a young man kills much meat, he comes to think of himself as a chief or a big man, and he thinks of the rest of us as his servants or inferiors. We can’t accept this. We refuse one who boasts, for someday his pride will make him kill somebody. So we always speak of his meat as worthless. In this way, we cool his heart and make him gentle.

Likewise, Robert Kelly (1995) explains that foragers ensure food is shared through accusations of stinginess, verbal abuse, and demanding a share of food. Among the Hadza foragers of Tanzania, men who make a claim to be chief are similarly mocked and humiliated. Rather than being inherently and nobly generous, Kelly describes foragers as “aggressively egalitarian.” Foragers will also avoid appearing stingy. In her classic work The Harmless People, Elizabeth Marshall Thomas writes, “A Bushman will go to any lengths to avoid making other Bushmen jealous of him, and for this reason, the possessions that Bushmen have are constantly circling among the members of their groups.” Other non-state societies stress sharing in part to keep social tensions down. Children’s games in Western societies often result in a single winner. As Lightning McQueen states, “One winner. Forty-two losers. I eat losers for breakfast.” Jane Goodale describes a very different game among the Kaulong of New Britain in the book To Sing with Pigs is Human (1995:119). Children are each given a banana. Instead of one child working to get the most bananas, the children each divide their banana in half, and then give it to another child. Then the half-banana is divided again, and half given to another child. The process is repeated until children are passing around tiny portions of a banana. The important thing in the game is not to get the most bananas but to form as many friendly relationships as possible.

One incentive to sharing is called tolerated theft. Tolerated theft occurs when the cost of defending a resource, like meat, is more than the benefit of keeping it. An example illustrates this simple concept. In a recent crime in Santa Fe, a man danced around another man who was eating a green chile cheeseburger (Resisen 2017). The dancing man then slapped the burger out of the other man’s hands and ran off with it. In that situation, the cost of defending the half-eaten burger, by running after the strange man, was greater than the value of the burger itself. One student in this class brilliantly explained tolerated theft at the Dollar Store:

The concept of tolerated theft can be seen in the modern world in the Dollar Store’s loss prevention measures. Unlike retailers like Walmart or Target, the Dollar Store does not invest in loss prevention measures like security guards or cameras, because the cost of defending their low-price items is not worth the price of defending them from shoplifters.

In forager societies, it is not easy to defend access to food or other resources. If someone tries to claim more than others, the group can ostracize the person, physically abuse him, or refuse to share later. Anthropologist Lorna Marshall suggested that !Kung women intentionally restrict their foraging to avoid being subjected to an onslaught of demands. Similarly, the Hiwi of Colombia will hide their belongings when another group visits, so as not to appear stingy (Last of the Cuiva 1970). While freeloading in mainstream American culture is frowned upon, among foragers being stingy is worse.

Another reason for sharing among foragers is reciprocity. Reciprocity entails the equal exchange of resources like food. In this type of exchange, there is an expectation of future sharing. This type of reciprocal food sharing often occurs when large game is brought in on a sporadic basis. Sharing then is not a benevolent gesture on the part of the hunter, but a strategy to offset his future shortfalls in food and also to form cooperative bonds with other people. Cultural anthropologist Bruce Knauft describes the importance of reciprocity among the Gebusi people of Papua New Guinea. The Gebusi have “exchange names” such that a person is referred to as “my bird egg,” “my fishing line,” or “your salt” depending on the item given or received. In this way, a person is constantly reminded of their reciprocal relationships and their social identity is tightly bound to reciprocity. Likewise, among the Napo Runa of Ecuador, the complex web of relationships is likened to a net bag or shigra, where everyone is connected in some way to someone else (Uzendoski 2005:5).

Television programs that feature one or two people trying to make it in the wild don’t capture the essence of being a forager, because foraging is a group effort. When one person experiences a shortfall, he can count on others to share, and later reciprocate in kind. Reciprocity is not just a forager thing, of course; it is found in many traditional (non-industrialized) and industrialized societies. In the cultural anthropology course “The Art of Being Human”, cultural anthropologist Michael Wesch explains how gift giving in Papua New Guinea creates a web of relationships that bind people to each other. People feel a strong sense of interdependence through sharing and story-telling. In the United States, we simply pay someone for our coffee or cable installation, and the relationship is over. Trying to pay for a service in Papua New Guinea, where reciprocity and human relationships form the foundation of society, is offensive. As Wesch explains, it “ends a relationship.”

A third reason for sharing is that men who share meat (men are more likely to hunt large game, though there are many exceptions) stand to gain prestige. Even when the meat is “insulted” (that is, when the hunter’s success is minimized), everyone is still aware of who the best hunters are (Kelly 1995). Good hunters tend to have higher status than others. This type of status is called achieved status because it derives from a person’s accomplishments during his or her lifetime. Achieved status provides one with influence rather than control. This contrasts with ascribed status, in which status is inherited. Most Americans also have achieved status, unless they are a Kennedy or a Kardashian. Foragers are not able to accumulate much wealth, and thus inheritance of wealth and status is limited.

Forager Diet

Every culture has deep-seated notions about what is food and what is not food. Humans are omnivores capable of eating a wide range of plant and animal foods. Foragers eat a variety of foods and have remarkable knowledge of how to find food in even the most meager of environments. People living in industrialized nations often have no idea where their food comes from beyond the grocery store. Foragers, on the other hand, are food experts. A wonderful example of this ingenuity is how Australian Aboriginal people track a striped honey ant back to its nest. People dig out the honey ants, as they are called, and suck out the sweet liquid stored in their abdomens. In Australia, a large moth larva called the witchetty grub is eaten both raw and cooked and is a good source of protein. In the Amazon Basin, palm larvae are eaten both cooked and raw. In the American Southwest, people have traditionally eaten cicadas (Davis 1915:191). In places like Colombia and Ecuador, leaf-cutter ant queens (hormigas culonas) are collected during their nuptial flight and deep-fried. Westerners are often repulsed by insect-eating, but as one bee-keeping student succinctly put it, “honey is basically bee barf.”

Foragers have discovered ingenious methods of getting food. The blowpipe, used by foragers in the Amazon Basin and the forests of Southeast Asia, is especially good for hunting animals high in the forest canopy, like monkeys. Poison obtained from plants, like curare of the Amazon, or from poison dart frogs is used to tip the darts and immobilize prey. Foragers of the Kalahari also use poison derived from a beetle to tip their arrows.

Anthropologists have pointed out that our forager forebears have shaped our preferences for food today. It makes energetic sense to go for calorie-dense foods like fatty meat, roots and tubers, and even sugar rather than leafy greens, even though greens are good for us. That is, we have been shaped by our past to not want to eat salad unless it is covered in salad dressing, cheese, and nuts. Foragers also consider processing and handling time. If it takes more energy to crack a nut than the nut itself provides, it’s better to go for something else. Likewise, if it takes more energy to chase down a rabbit, skin it, butcher it, and cook it than the rabbit provides, then it just isn’t worth it to hunt rabbits. We make these same decisions all the time as well, and this is part of the reason fast food, with no handling or processing time and lots of fat and calories, is so hard to pass up.
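The handling-time reasoning above can be sketched as simple arithmetic: a food is worth going after only if the energy it provides exceeds the energy spent finding and processing it. The short Python example below is an illustration only. The class name, function names, and every calorie figure are invented for the sketch and are not measurements from any forager study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FoodItem:
    """One food choice, with made-up energy figures in kilocalories."""
    name: str
    energy_gained_kcal: float   # energy the food provides once eaten
    pursuit_cost_kcal: float    # energy spent searching for or chasing it
    handling_cost_kcal: float   # energy spent cracking, skinning, cooking, etc.

    def net_return_kcal(self) -> float:
        """Energy gained minus all the energy spent getting it."""
        total_cost = self.pursuit_cost_kcal + self.handling_cost_kcal
        return self.energy_gained_kcal - total_cost


def worth_pursuing(item: FoodItem) -> bool:
    """A food is worth the effort only if it returns more than it costs."""
    return item.net_return_kcal() > 0


def rank_by_net_return(items):
    """Order foods from best to worst net energy return."""
    return sorted(items, key=lambda item: item.net_return_kcal(), reverse=True)


# Hypothetical numbers chosen only to illustrate the comparison.
ITEMS = [
    FoodItem("hard-shelled nut", energy_gained_kcal=50,
             pursuit_cost_kcal=5, handling_cost_kcal=60),
    FoodItem("tuber", energy_gained_kcal=400,
             pursuit_cost_kcal=40, handling_cost_kcal=60),
    FoodItem("fatty meat", energy_gained_kcal=3000,
             pursuit_cost_kcal=900, handling_cost_kcal=300),
]

for item in rank_by_net_return(ITEMS):
    verdict = "worth it" if worth_pursuing(item) else "skip"
    print(f"{item.name}: net {item.net_return_kcal():+.0f} kcal ({verdict})")
```

With these invented figures, the nut costs more to crack than it gives back, so it falls to the bottom of the ranking, while the calorie-dense meat comes out on top even though it is the most expensive item to obtain.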

Division of Labor

Forager society is often divided up not by class, but by age and sex. The sexual division of labor means that economic tasks are divided by sex or perceived sex. Hunting with weapons like arrows or spears is often a man’s task, though there are many exceptions. Agta women of the Philippines, for example, traditionally hunted large game like deer with bows and arrows, sometimes while carrying infants on their backs. Anthropologists Michael Gurven and Kim Hill explain in their article “Why Do Men Hunt?” that Agta women would traditionally hunt with dogs and target areas closer to their village, where men did not hunt. More commonly, women in forager societies tend to gather food, watch children, prepare meals, construct shelters and clothes, get fuel and water, transport items between camps, and hunt smaller game. Women’s gathered food resources often tend to be more predictable, while men’s hunting often provides more food, but on a less predictable basis. However, there is quite a bit of variation in the availability and importance of different food items among foragers.

Mothers and Others

A look at forager childcare is enlightening. Children in the Kalahari do little to no work, do not forage for food in any significant sense, and are treated gently. Children are a distraction on foraging trips, and there are few foods available for children to forage easily. In addition, the high temperatures of the Kalahari and the need for water make taking children on longer forays inefficient. Mothers and fathers supply children with most of their food well into their teens. In other forager cultures, children contribute more. For instance, anthropologists Kristin Hawkes, James O’Connell, and Nicholas Blurton-Jones have found that among the Hadza of Tanzania, older children can forage more effectively.

Childcare is not a solitary endeavor among foragers. In all known hunter-gatherer societies, mothers let others hold their babies, not so different from Westernized childcare. Alloparenting means that someone other than the mother provides help in caring for infants. Humans in both foraging and industrialized societies stand in stark contrast to chimpanzees, who diligently guard their babies against others. “Hadza mothers,” writes Frank Marlowe (2005), “are quite willing to hand their children off to anyone willing to take them.” Hadza grandmothers and fathers provide a considerable amount of childcare. Anthropologist Sarah Hrdy argues that in a species with high child mortality and offspring that are extremely costly to raise, namely Homo sapiens, this kind of cooperation makes sense. She goes on to argue that alloparenting in our human past shaped our emotional lives, honing our ability to read emotions and see motivations in others. This need for alloparenting may have contributed to us being such a highly social and cooperative species. Indeed, babysitting likely played a significant role in making us human.

Nasty, Brutish, and Short?

The political philosopher Thomas Hobbes (1588–1679), in contrast to Rousseau, famously wrote that life in the state of nature, which foragers were thought to represent, was “solitary, poor, nasty, brutish, and short.” It was once widely held that hunter-gatherers just didn’t have any spare time to elaborate their culture because they were so busy searching for food all the time. Agriculture, it was thought, released people from these time-consuming subsistence efforts and freed up time for other, more leisurely, artistic pursuits. Since the 1960s, anthropologists have known that some hunter-gatherers don’t put in the same long work days that agriculturalists or even industrialists do. African G/we hunters, for example, will hunt for about 5 hours a day, and some days people don’t forage at all. This apparent forager free time led anthropologist Marshall Sahlins to describe hunter-gatherers as “the original affluent society.” The calculation of work and leisure in any society is quite complicated, however, because abundance varies seasonally and other problems make work and leisure difficult to quantify. Also, different foragers live in different environments with different productive potential, and many have been pushed into areas where food is more scarce. Despite these difficulties, it is nonetheless clear that forager life isn’t always just about searching for food.

Another misconception about foragers is that they die young, rarely living past 40. This misconception is based on a misleading statistic called the average age at death, or average life expectancy at birth. The average age at death is exactly what it sounds like: you record all the deaths in a population over a period of time and take the average age at death. This statistic will be heavily influenced by infant and childhood deaths—these early deaths push the average age at death down into early middle age, even though many or most people are living into their 60s and 70s. This is especially true of populations living before the medical advances made in the 20th century. Among hunter-gatherers and, for that matter, all pre-20th century populations, the period between birth and 5 years of age is particularly fraught with risk. Among the pre-contact forest Aché foragers of Paraguay, former UNM anthropologists Kim Hill and Magdalena Hurtado estimated that 25 percent of the population died by age 5, while anthropologist Nancy Howell found that 33 percent of Dobe !Kung (Ju/’hoansi) children died by their fifth year. In some societies, early life is so precarious that infants are not even named until they are a year old or more.

The modal age at death, that is, the single most common age at which people in a given population die, tells a different story. In 2007, UNM anthropologists Michael Gurven and Hillard “Hilly” Kaplan carried out a survey of 50 hunter-gatherer populations from around the world for which there was detailed census data collected. They found that the average modal age of death for these forager populations was 72, plus or minus about six years (ranging from about 66 to 78). These results suggest that foragers who survive the precarious time of childhood live to a fairly ripe old age.
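The difference between the average age at death and the modal age at death is easier to see with numbers. The Python sketch below uses a small, entirely invented set of 100 deaths (it is not data from the Aché, the Ju/’hoansi, or the Gurven and Kaplan survey) in which 30 percent of deaths happen before age 5 and most of the rest cluster in the 60s and 70s. The variable and function names are likewise made up for the illustration.

```python
from statistics import mean, mode

# Hypothetical population: age at death -> number of people who died at that age.
# The counts are invented so that 30 of 100 deaths occur before age 5.
DEATHS_BY_AGE = {
    0: 12, 1: 8, 2: 5, 3: 3, 4: 2,        # infant and early-childhood deaths
    20: 3, 35: 4, 50: 6, 60: 9,           # scattered deaths in adulthood
    68: 12, 72: 15, 76: 11, 80: 7, 85: 3  # most adults die in old age
}


def expand(deaths_by_age):
    """Turn the age -> count table into one list entry per death."""
    return [age for age, count in deaths_by_age.items() for _ in range(count)]


ages = expand(DEATHS_BY_AGE)

average_age = mean(ages)   # pulled down sharply by the early deaths
modal_age = mode(ages)     # the single most common age at death
share_before_5 = sum(1 for age in ages if age < 5) / len(ages)

print(f"Deaths recorded:        {len(ages)}")
print(f"Share dying before 5:   {share_before_5:.0%}")
print(f"Average age at death:   {average_age:.1f}")
print(f"Modal age at death:     {modal_age}")
```

In this made-up population the average age at death is about 46, yet the most common age at death is 72. The early deaths drag the average down without changing how long the survivors of childhood typically live.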

Foragers in a Modern World

In a case famously described by Theodora Kroeber in her book Ishi in Two Worlds, an emaciated man stumbled out of the woods in northern California. His village was massacred by California settlers in 1865; he and his family survived by hiding in the woods for more than 40 years. When he finally emerged in 1911, he was nearly dead. Ishi, as he is known, was taken in by University of California, Berkeley anthropologist Alfred Kroeber. He was the last of the Yahi people and spoke a dialect of Yana, a now-extinct native language of northern California. Ishi lived at the university, providing arrowhead-making demonstrations until his death from tuberculosis in 1916. Astonishingly, this process of removing foragers from their traditional lands continues today in the face of settlers, oil drilling, logging, mining, and building of hydroelectric dams. Foragers find themselves between two worlds as they try to maintain their traditional ways and advocate for their rights.

Mobile foragers require large areas of land to meet their subsistence needs (an extensive use of land). Loss of land due to colonization, mining, logging, and the establishment of game preserves has all but eliminated the forager way of life. In 1961, in what is now Botswana, the Central Kalahari Game Reserve was created to protect wildlife, and the forager San people (Bushmen) were relocated to an area within the reserve. After time, the Botswana government said it wanted to integrate the San into a more developed life and stopped government support, plugged wells, and banned hunting and gathering, forcing the San out of the Reserve into settlement camps. The Botswana government said the San presence in the Reserve was inconsistent with the Reserve’s mission, but others suspected the government wanted to clear the area for diamond mining. In 2006 the evictions were ruled unconstitutional, but only a limited number of foragers were allowed to return. In 2014, diamond mining began in the reserve.

In a similar narrative, former forest dwellers such as the Batwa of Uganda have been displaced by the encroachment of farmers and the establishment of Mgahinga Gorilla National Park and the Bwindi Impenetrable National Park. The Ugandan government held that the Batwa had no claim to land because they never settled permanently, overlooking the fact that mobile foragers do not establish permanent settlements. The Bwindi Impenetrable National Park became a World Heritage Site for the protection of endangered mountain gorillas (Gorilla beringei beringei). Gorilla tourism brings in money for the government of Uganda, but the Batwa have suffered as a result. The Batwa are not permitted to forage in the park, are not employed by the park, and cannot teach their children their traditional subsistence practices. Today the Batwa live on the margins of their ancestral lands or in slums as “conservation refugees”. Conservation refugees are people who have been removed from their traditional lands in the process of creating parks and refuges for animals and plants.

Another case where animal conservation and foragers collide is that of the Makah of Neah Bay, Washington. Under the 1855 Treaty of Neah Bay with the United States, the Makah ceded some of their land but retained the right to whale in “common with all citizens of the United States.” The Makah ceased whaling in the 1920s when the numbers of gray whales dwindled due to over-hunting by the whaling industry. More recently, the gray whale was removed from the endangered species list, and in 1999 the Makah were allowed to hunt a gray whale. The hunt was denounced by some conservation groups. Since the hunt was not conducted for subsistence purposes, but rather for cultural reasons, the Sea Shepherd Conservation Society claimed the hunt was an invalid and dangerous exemption to the Marine Mammal Protection Act.

A case where an indigenous people have successfully combined cultural and biological conservation is the Cofán of Ecuador. The Cofán are traditional forager-gardeners of the Ecuadorian Amazon. In the 1970s, Cofán territory was devastated by oil drilling, and some Cofán responded by establishing a new settlement and defending themselves against the illegal encroachment of oil companies. Instrumental in this process is American-Ecuadorian Randy Borman, the “gringo-chief” of the Cofán. Borman was born to American missionaries in Ecuador, is fluent in A’Ingae, and is married to a Cofán woman. Borman has been able to both live the traditional life of a Cofán person and argue the case of the Cofán with governmental agencies, oil companies, and non-profit organizations. Borman and other Cofán created the FSC (Foundation for the Survival of the Cofán People), which helped to triple the size of Cofán territory, conduct scientific and conservation projects, and educate future leaders (Cepek 2012:103). Today Cofán people manage and protect the biologically diverse Cayambe-Coca Ecological Reserve. The Cofán also provide community-based ecotours in which Cofán people act as ecological guides.

Foragers have also been faced with protecting their intellectual property. Foragers have a vast understanding of the plants and animals in their environments and have identified plant medicines, pesticides, preservatives, perfumes, sweeteners, and of course new foods. Intellectual property rights extend to indigenous knowledge of plants. And yet, “gene hunters” are subcontracted by large firms to acquire this knowledge from traditional societies to patent the gene or chemical and make a profit (Vishnu Dev 2016).

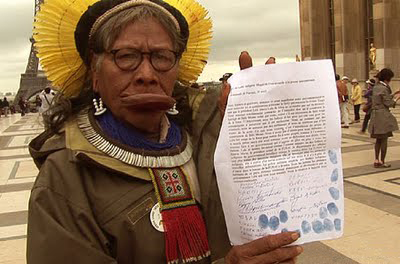

In Brazil, indigenous Kayapó leader Raoni Metuktire has fought for decades for the preservation of the rainforest, threatened by dams, logging, and cultivation. Although he and his people only became acquainted with Western culture in the mid-1950s, Metuktire has met with international leaders and has become a symbol of the effort to preserve the Amazon. In response to the recent Amazon fires, Metuktire (2019) wrote an opinion piece in the Guardian stating, “For many years we, the indigenous leaders and peoples of the Amazon, have been warning you, our brothers who have brought so much damage to our forests. What you are doing will change the whole world and will destroy our home – and it will destroy your home too.” In 2019, Metuktire was nominated for the Nobel Peace Prize.

“Raoni holding his international petition against the Belo Monte Dam in Paris.” by Gert Peter-Bruch is licensed under CC BY-SA 3.0

Food Production

In contrast to the foraging lifestyle are the food producers. Food production, which relies on domesticated plants and animals, began around 10,000 years ago. Today, most people in the world rely on some form of domesticated food, a stark departure from our forager ancestors. Anthropologists divide up food producers into categories of pastoralism, horticulture, and intensive agriculture.

Pastoralism

Pastoralism means herding and raising animals. Pastoralists have domesticated herbivores including cattle, horses, reindeer, sheep, and goats, depending on the region. Traditional pastoralists include the Maasai, Dinka, and Nuer of Africa, the reindeer herders of far northern Scandinavia (Sami) and Siberia (Eveny), and nomadic pastoralists of Tibet. Mobility varies with different animals and in different environments. Pastoral nomads move with their herds between sources of water and food and lead a highly mobile existence. Transhumant pastoralists move animals between seasonal camps and often supplement their diet with crops. Pastoralism often occurs in open, semi-arid regions where farming is difficult.

Typically, pastoralists do not kill their animals very often for food, but rather rely on their milk and blood. In one practice, animals are “bled” without killing them and the blood is mixed with milk. When an animal is killed, it is often shared during a feast usually accompanied by a ritual. As with foragers, sharing among pastoralists confers prestige as well as sets up reciprocal obligations.

Horticulture

Horticulture is farming with the use of simple hand tools and human labor on temporary farm plots. Horticulture first began more than 10,000 years ago around the end of the last ice age. In addition to simple farming, horticulturalists often supplement their diet with some hunting, raising domesticated animals, and selling goods. Horticulturalists move their semi-permanent farm plots to another area as soil fertility in the original plot decreases. This practice is called shifting cultivation, or swidden, and allows farmland to rejuvenate over time. To improve the soil, horticulturalists will often practice slash and burn, clearing and burning vegetation except for the largest trees before farming a plot of land. In general, areas that are conducive to slash and burn horticulture are ill-suited for long-term use of farm plots, which would require large amounts of fertilizer and pesticides to sustain the soil. Because they produce more food in a given area, horticulturalists use the land more intensively than foragers. As a result, horticulturalists tend to live in denser communities than foragers, whose populations tend to be small.

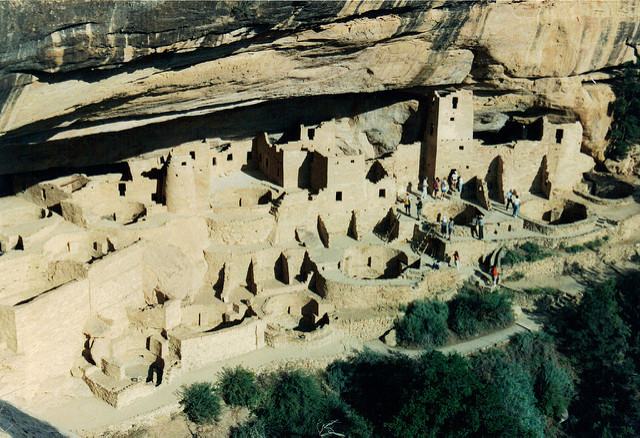

Horticulture has recently been practiced in the Amazon Basin, Papua New Guinea, central Africa, Samoa, and Southeast Asia. Farmers in the American Southwest practiced horticulture as well, relying on floodplain water for their fields. Because horticulturalists maintain fields for several years before moving to a new plot, their architecture tends to be more permanent than that of mobile foragers. Horticulturalists often use techniques like multi-cropping and planting certain crops together to boost productivity and minimize erosion and soil depletion.

Intensive agriculture

Early intensive agriculture began in Mesopotamia (modern-day Iraq), Egypt, India, and China. Intensive agriculture differs from horticulture in that it continues to use the same plot of land rather than periodically shifting plots. Intensive agriculture produces more food per unit of land than does horticulture. It accomplishes this by people working harder, adding animal labor, and applying farming innovations to keep up soil fertility. Innovations to improve soil productivity include fertilizers, plows, irrigation, and terracing (creating steps out of a hillside for gardens). All these inputs require a great deal of time and energy to build and maintain. Fields must be weeded and pests like mice, rabbits, and grasshoppers driven out. Domesticated animals must be fed, housed, bred, and cared for. Irrigation ditches and terraces must be engineered, excavated, and maintained. Fertilizers must be collected and spread on the fields and farming equipment must be made and maintained. The plow is an important innovation, allowing farmers to reach nutrients below the topsoil. Rather than moving periodically to rejuvenate the soil, efforts are increased to ensure soil productivity over the long run.

What’s more, intensive agriculturalists tend to grow storable grains. Storage units have to be created, maintained, and kept free from vermin. In addition, the grains must be processed on a grinding stone to get the most food value from them. This can entail grinding grains for many hours a day. Clearly, agriculture did not free people from heavy labor. In Homo Deus, Yuval Harari likens the shift to farming to the expulsion of Adam and Eve from Eden, after which they had to work “by the sweat of your brow,” forever leaving behind a forager lifestyle. In this light, agriculture hardly liberated people from hard labor or gave them more leisure time to pursue their passions and develop the arts and sciences. Quite the contrary, people were working harder than ever, investing more time and energy as intensive agriculturalists compared with foragers and horticulturalists. On the other hand, more people could be supported per unit of land, and food could be more easily stored and defended, providing some food security during shortfalls and lean seasons.

Consequences of Intensive Agriculture

There are consequences of intensifying production, even before the introduction of fossil fuels, pesticides, and monocropping. Because intensive agriculturalists do not move, investment in architecture tends to increase and more permanent settlements emerge. Staying in one place is called sedentism. Instead of the movable tents or simple brush structures that mobile foragers have, intensive agriculturalists often build houses from clay bricks, stones, or other more permanent materials. This can be seen very clearly in the archaeological record. When people in the American Southwest began to rely on maize, they started building more permanent structures for living and storing food, which we call pueblos. Differences in wealth and power also emerge with intensive agriculturalists, in contrast to the egalitarian pattern of foragers.

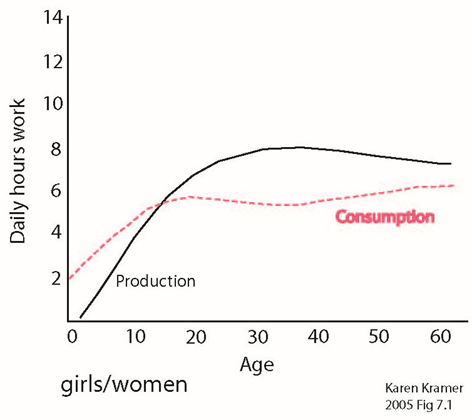

Populations tend to increase with the onset of pre-industrialized intensive agriculture as well. Not only can more people be supported per unit of land, but anthropologist Karen Kramer argues that children of intensive agriculturalists can do many tasks that offset the costs of raising children. Girls in agricultural societies process grain, fetch water and fuel, care for children, cook, wash clothes, and perform other tasks. In her book Maya Girls, Kramer shows that around age 13, Maya girls begin to produce more than they consume. When electricity came to a Maya village, grinding maize, a task that girls performed for hours a day, became mechanized. As a result, the age of first menstruation, which is sensitive to food intake and energy expenditure, dropped by 1.5 years. This drop reveals the considerable amount of energy that Maya girls were spending on household tasks. Kramer argues that Maya girls were underwriting the cost of their parents having more children, resulting in population growth.

Forager children, in contrast, typically do not contribute to the household economy until relatively late in life. For instance, Ache boys of Paraguay don’t produce more than they consume until around the age of 17. Ache women and girls never produce more food than they consume. In essence, Kramer argues that intensive agriculturalists can afford more children than foragers. This idea remains the subject of some debate, because Hadza hunter-gatherer children have been shown to forage for a considerable amount of their daily intake. Environment therefore likely plays a key role in children’s production in both forager and agricultural societies. While there is a tendency for mobile forager children to do little work and for children of agriculturalists to do a lot of work, different environments will affect the nature of children’s labor.

Zoonotic Diseases

Intensive agriculturalists often use animal labor in addition to human labor, coming into contact with animal meat, blood, and feces. These close quarters with animals have the consequence of increasing the occurrence of zoonotic diseases. Zoonotic diseases are those that can be transferred between humans and animals. The diseases typically start in animal populations but shift when a genetic mutation allows them to jump to humans. Zoonotic diseases are very common and can be disastrous for human populations. The infecting agents can be bacteria, parasites, fungi, and viruses. Examples include bubonic plague, Lyme disease, hantavirus, Ebola, Zika, HIV/AIDS, polio, malaria, yellow fever, mad cow disease, rabies, smallpox, swine flu, bird flu, toxoplasmosis, and chicken pox. Relatedly, the build-up of garbage is a problem for pre-industrial intensive agriculturalists and urban dwellers alike. Garbage dumps called middens can be found adjacent to settlements in the archaeological record, the physical remains of the past. Middens are a treasure trove of information for archaeologists. For people living near these dumps, however, they can become sources of disease, containing animal and human waste and attracting vermin like cockroaches, rats, and mice. Cats, who prey on these vermin, began living in human settlements 9,500 years ago, around the time that humans began farming and storing food (Ault 2015).

Today, with more than 7 billion people on the planet, we live in more densely packed urban centers than ever and are connected globally. When epidemics start, like the 1918 Spanish flu epidemic that killed more people than World War I, they can be difficult to contain. Given the unprecedented connection between people today, there is a call for a global system of response to deal with potential epidemics. Economist Jeffrey D. Sachs, Professor of Sustainable Development, Professor of Health Policy and Management, and Director of the Earth Institute at Columbia University, calls for a system of global readiness and response, which includes funding for the production and distribution of vaccines.

Other Effects of Agriculture

As agriculture intensified, people began to store foods, especially grains. Populations grew denser, and people could defend those resources from outsiders. Note that this contrasts with the tolerated theft of foragers, who can’t easily defend their hunted foods. Differences in wealth and status began to emerge (as evidenced by the archaeological record) as some people had better land and higher production than others. Specialists including scribes, soldiers, priests, and bureaucrats emerged; these people did not produce food for themselves, but rather provided a service or product in exchange. Ruling, merchant, and peasant classes eventually arose along with the concept of personal property. For the ruling class, status and prestige became at least partially ascribed, inherited at birth.

Unilineal Evolution

As European nations came into contact with and began to colonize North and South America, Africa, and India, they encountered people who were physically and culturally unlike themselves. This led to the question, why do people in different parts of the world look and behave differently? The concept of unilineal evolution developed out of this question. Unilineal evolution is based on the late 17th and 18th century Enlightenment idea of progressivism, the belief that humans are advancing toward intellectual and moral perfection. One version of this framework was that humans progressed through different stages from “savagery” to “barbarism” to “civilization,” with civilized society at the pinnacle. In this ranking system, the more you controlled nature, the more civilized you were, and the more you represented a model of social and moral perfection.

The idea of unilineal evolution was espoused by anthropologist Lewis Henry Morgan and others in the late 1800s, in part based on archaeological discoveries of a progression from stone tools to metal ones in Europe. Not surprisingly, the adherents of this idea considered themselves members of the most intellectually and morally advanced group—the civilized sort. Under this mindset, early anthropologists decided that foragers of their day were still languishing in the stone age with all the supposed mental and moral deficits that went with it. Unilineal evolution bears no resemblance to Charles Darwin’s (1809–1882) ideas about evolution through natural selection. Quite the contrary, Darwin, who encountered many different peoples on his travels aboard the Beagle, pointed out the remarkable similarities in humans that he encountered.

Unilineal evolution was not just an ivory tower idea but had real-world consequences. At the 1904 Louisiana Purchase Exposition in St. Louis, Missouri, there was an exhibition of living cultures which included Apaches from the Southwest, the Tlingit of southeast Alaska, and a man named Ota Benga, a Mbuti native of the African Congo (the Mbuti were formerly called “pygmies” for their short stature). Ota Benga was a personable man with a set of sharpened pointed teeth, a result of a coming-of-age rite of passage among the Mbuti. More than 19 million people attended the event. Geronimo was also part of the Exposition, and it is said that he liked Ota Benga so much that he gave him one of his arrowheads.

After the Exposition, Ota Benga went to the Bronx Zoo where he did odd jobs. Unfortunately, Ota Benga was harassed by tourists and took to targeting bullying children with tiny arrows shot from a miniature bow he had made. As a result, Ota Benga ended up as an exhibit in the “Monkey House” at the Bronx Zoo as part of a publicity stunt. One editorial in the New York Times called to mind unilineal evolution by stating that “The pygmies … are very low in the human scale” (The Guardian 2015). According to the New York Times, an African-American clergyman wrote in protest, “We think we are worthy of being considered human beings, with souls”. Following public protest, Ota Benga was released and went to live with a family in Virginia. By that time, he wanted to return to Africa, but travel became impossible once World War I broke out. Distraught, Ota Benga removed the caps that had been put on his pointed teeth, built a fire, and shot himself. No one knows where his remains are today.

Anthropologist Franz Boas (1858–1942) rejected unilineal evolution and the false distinction between “primitive” and “civilized” people. Boas had worked with Inuit and Northwest Coast societies and wrote descriptions of those cultures called ethnographies. He appreciated their intricate worldviews and histories, comprehensive knowledge of their environments, and complex social and political systems. In short, he knew people were people and could not objectively be ranked on scales of intelligence or morality, and that traditional societies weren’t somehow less than human.

Through his first-hand experiences, Boas created the term “cultural relativism.” Cultural relativism is the idea that a culture needs to be understood within its own context. The flip side of cultural relativism is ethnocentrism, passing moral judgment on a culture based on one’s own culture. Cultural relativism is not about passing moral judgment or giving cultural practices the unexamined stamp of approval but rather is intended as a tool to understand why people do what they do. Franz Boas’ insistence that human cultures be understood on their own terms earned him the title “father of American anthropology.” Boas also championed what was called salvage anthropology, working to record cultural practices that were quickly disappearing in North America and elsewhere with European colonization, removal of people from aboriginal lands, language decline, the decimation of populations by infectious diseases, and in some cases, outright genocide.

While the idea of unilineal evolution may seem like a relic of the past, the notion continues today. Jair Bolsonaro, the president of Brazil, stated in 2020 that “The Indian has changed, he is evolving and becoming more and more, a human being like us.” Bolsonaro believes the land allotment of the indigenous people in Brazil is too large and hopes to open it up to mining, logging, and other industrial ventures.

The Anthropocene

The Industrial Revolution truly revolutionized how we get food. For most of human existence, human power and relatively simple technology have sustained our species. Increasing human labor, animal domestication, and technological innovations like the plow increased production per unit of land and allowed populations to grow. Today, reliance on fossil fuels to power the machinery that clears land and produces food has changed our lives entirely. What’s more, industrialization is changing the face of the planet in unprecedented ways. Most dramatically, humans have altered the ratio of animal species to favor our lifestyle and dietary habits. Worldwide there are 780 million pigs, nearly a billion cattle, and 21 billion chickens, making chickens the most common bird in the world. The wild ancestor of domesticated cattle, the aurochs, went extinct in the forests of Poland in 1627. The wild ancestors of the camel, sheep, and house cat are on the brink of extinction (Francis 2015). Animals that we enjoy as companions far outnumber their wild counterparts. For instance, in recent years there have been about 95 million cats in the United States alone. Globally, there are around 200,000 wild wolves, but 400,000,000 domesticated dogs. Humans themselves numbered around 1.6 billion in 1900, but number around 7 billion today.

Other species that do not contribute as immediately to human appetites, like Sumatran tigers (Panthera tigris sumatrae, ~500), mountain gorillas (Gorilla beringei beringei, ~1,000), and northern white rhinos (Ceratotherium simum cottoni, 3 in captivity) are on the brink of extinction. Przewalski’s horse (Equus ferus przewalskii, 250 in the wild), the only horse that has never been domesticated and is strikingly similar to horses in early European cave paintings, is also close to extinction. In a recent article in the Atlantic, science writer Peter Brannen (2017) points out, “today wildlife accounts for only 3 percent of earth’s land animals; human beings, our livestock, and our pets take up the remaining 97 percent of the biomass.” Human populations are expected to reach 9 or 10 billion by 2050, increasing the pressure on non-domesticated animals through encroaching settlements, mining, logging, poaching, the illegal pet trade, and other pressures, creating further homogenization of biological life. For more information on threatened species, see the Red List of the IUCN (International Union for Conservation of Nature).

Other changes are taking place as a result of human intelligence and cooperation, including the rise of cities, massive dams, huge mining operations, irrigated farms, ocean acidification, surface temperature increases, ocean dead zones, deforestation, changes in the composition of the atmosphere, and ozone depletion. Ice cores from Antarctica indicate higher levels of greenhouse gases today than at any time in the last 500,000 years. If we reach an increase of 4 degrees Celsius, the planet will change dramatically, resulting in extinctions, crop failures, starvation, and huge migrations of people. It is not just scientists who worry about the human impact on the earth’s systems, but foragers as well. As Henry Rosas, a Matsigenka (hunter-gardener) man of the Peruvian Amazon, puts it, “We ourselves have to realize that it is we human beings ourselves that are poisoning everything or else we will ourselves. We will kill ourselves unless we wake up” (Proyecto EBA Amazonía 2016).

Humans have become the most important agent of ecological and climatological change (Harari 2017). We may be a speck in the galactic sense, but we have transformed our speck. Geologists divide time into epochs based on changes in rock layers (also called strata) that correspond to changes in climate and environment. Technically speaking, we live in the Holocene. Yet observing how we have transformed our planet, scientists are now proposing that we are living in a new age called the Anthropocene, the age of humans. Never before has a single species been responsible for altering its ecosystem on such a massive scale. We have become a geophysical force on par with volcanoes and asteroid impacts.

Geologists use fossils and chemical signatures to identify different geological periods. What signs, the future fossils, might future geologists use to define the Anthropocene? Three contenders are fossilized chicken bones, increased radiation levels, and possibly plastics. The Working Group on the Anthropocene is currently debating which signatures will likely prevail. This is a difficult prospect since our lifetimes are so minuscule compared to geologic time. The start date of the Anthropocene is also currently being debated. Some suggest that European contact with the Americas, with the resulting exchange of agricultural products and the death of an estimated 50 million people from disease, should mark the official start. Others think the beginning of the Industrial Revolution in 1710 is more appropriate. Still others think 1950 is a solid starting date, with the onset of nuclear testing, increased use of plastics, fertilizers, and concrete, along with deforestation, rising carbon dioxide emissions, and increases in domesticated animals like chickens. Wherever you place the starting point, it is clear that humans have had an outsized impact on the planet.